Self-assessment test as a measure of speaking proficiency

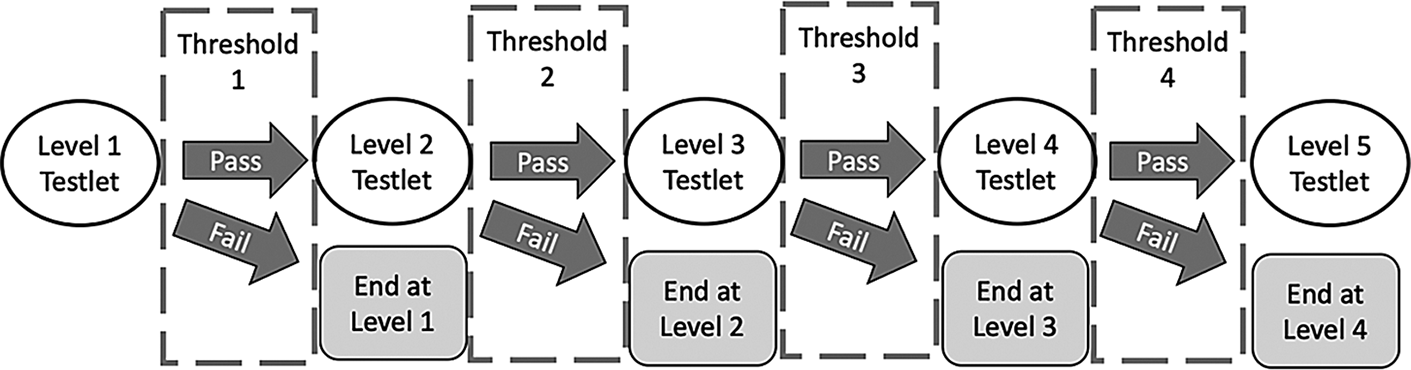

Sequential selection process of the self-assessment

Validating a self-assessment test as a measure of speaking proficiency

In meta-analyses of second-language (L2) learning, students’ self-assessments of speaking proficiency have been found to have only moderate Pearson correlations with rigorously designed tests of speaking proficiency such as the ACTFL Oral Proficiency Interview – computer (OPIc). This study demonstrated that such correlations may be attenuated by statistical methods that do not account for the ordinal nature of self-assessment measures. With a sample of 807 L2-Spanish learners, we found that the polyserial correlation between a computer-adaptive self-assessment test and the OPIc was higher than the corresponding Pearson correlation. We also demonstrated how a continuation-ratio model provides far richer and more informative ways to assess the validity of self-assessment tests than distilling the relationship down to a single correlation coefficient. A one-unit increase in the OPIc was associated with a 131% increase in the odds of passing thresholds on the self-assessment test. We argued that self-assessment tests make intuitive sense to L2 learners, promote their agency, and are cost-effective, so they are appropriate for use in low-stakes L2-proficiency assessments.
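To make the reported effect size concrete, here is a minimal Python sketch of a continuation-ratio (sequential logit) model. In this model, learners attempt each self-assessment threshold only after passing the previous one. A 131% increase in odds corresponds to an odds ratio of 2.31, which is a log-odds slope of ln(2.31) ≈ 0.837. The sketch assumes a single slope shared by all thresholds and a numeric coding of OPIc ratings. The threshold intercepts in `alphas` and the OPIc value used are hypothetical illustrations, not estimates from the study.

```python
import math

def logistic(z):
    """Inverse logit: maps a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def continuation_ratio_probs(x, alphas, beta):
    """P(Y = k | x) for k = 0..len(alphas) under a continuation-ratio logit model:
        logit P(Y > k | Y >= k, x) = alphas[k] + beta * x
    Each threshold is attempted only by learners who passed the previous one.
    """
    probs = []
    reach = 1.0  # P(Y >= k | x): probability of reaching threshold k
    for alpha in alphas:
        p_pass = logistic(alpha + beta * x)  # conditional probability of passing threshold k
        probs.append(reach * (1.0 - p_pass))  # reached threshold k but stopped there
        reach *= p_pass
    probs.append(reach)  # passed every threshold: highest self-assessment level
    return probs

# A 131% increase in odds is an odds ratio of 2.31 per one-unit OPIc increase.
beta = math.log(2.31)  # about 0.837 on the log-odds scale
alphas = [2.0, 0.5, -1.0, -2.5]  # HYPOTHETICAL threshold intercepts, for illustration only

# x is an OPIc rating coded as a number (HYPOTHETICAL coding and value).
probs = continuation_ratio_probs(x=3.0, alphas=alphas, beta=beta)

def pass_odds(x, alpha):
    """Conditional odds of passing one threshold, given it was reached."""
    p = logistic(alpha + beta * x)
    return p / (1.0 - p)

# With a shared slope, the odds of passing any threshold scale by exp(beta) per unit of x.
odds_ratio = pass_odds(4.0, alphas[1]) / pass_odds(3.0, alphas[1])
print([round(p, 3) for p in probs], round(odds_ratio, 2))
```

Because `probs` gives a full distribution over levels for each OPIc value, the model shows where along the sequence of thresholds learners tend to stop. A single correlation coefficient cannot show this. `odds_ratio` reproduces 2.31 up to floating-point rounding, whichever threshold is used.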
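The attenuation argument can be illustrated with a small simulation. This Python sketch uses only the standard library. It draws a continuous score (standing in for the OPIc) and a correlated latent variable, then cuts the latent variable into four ordinal levels (standing in for a self-assessment result). It then compares the Pearson correlation with a polyserial estimate. For simplicity it uses the two-step "ad hoc" polyserial estimator described by Olsson, Drasgow and Dorans (1982) rather than full maximum likelihood, which is not necessarily what the study used. The true correlation of 0.7, the cut points, and the sample size are arbitrary assumptions.

```python
import math
import random
import statistics

def simulate(n, rho, cuts, seed=1):
    """Draw (x, y) pairs: x is continuous; y is an ordinal score 1..len(cuts)+1
    obtained by cutting a latent normal variable whose correlation with x is rho."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        latent = rho * x + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0)
        xs.append(x)
        ys.append(1 + sum(latent > c for c in cuts))  # count of cut points passed
    return xs, ys

def pearson(xs, ys):
    """Ordinary Pearson product-moment correlation."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)

def adhoc_polyserial(xs, ys):
    """Two-step polyserial estimate: Pearson r rescaled by sd(y) / sum_j phi(tau_j),
    where tau_j are the normal thresholds implied by the cumulative proportions of y."""
    levels = sorted(set(ys))
    if levels != list(range(levels[0], levels[-1] + 1)):
        raise ValueError("ordinal scores must be consecutive integers")
    std_normal = statistics.NormalDist()
    n = len(ys)
    cumulative = 0
    density_sum = 0.0
    for level in levels[:-1]:  # one threshold between each pair of adjacent levels
        cumulative += ys.count(level)
        tau = std_normal.inv_cdf(cumulative / n)
        density_sum += std_normal.pdf(tau)
    return pearson(xs, ys) * statistics.pstdev(ys) / density_sum

xs, ys = simulate(n=20_000, rho=0.7, cuts=[-1.0, 0.0, 1.0])
r_pearson = pearson(xs, ys)
r_polyserial = adhoc_polyserial(xs, ys)
print(f"Pearson r = {r_pearson:.3f}, polyserial r = {r_polyserial:.3f}")
```

In this setup the Pearson correlation comes out noticeably below the true value of 0.7, while the polyserial estimate lands close to it. This is the direction of the difference the study reports. For real data, a maximum-likelihood polyserial estimate from an established statistics package would be preferable to this approximation.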

PIs: Paula Winke and Susan Gass

CSTAT collaborator: Steven J. Pierce